The faces behind project EKAmBa

Jasmin Brandt

University Paderborn

Elias Schede

Bielefeld University

Tailoring Algorithms: Better Performance Through the Right Settings

Behind every computer program is a step-by-step guide that tells the computer what to do and when to do it. These guides are called algorithms. Algorithms are like toolboxes filled with instruments designed to solve specific problems. The tools and the order in which they are used to solve a problem successfully are determined by the settings chosen by the user.

As part of the EKAmBa project, we are developing methods for the automated configuration of algorithms. By combining structured search with theoretical approaches, our goal is to create methods that not only find good practical settings for an algorithm but also mathematically guarantee that we’ve found the best possible ones.

Finding the right configuration for an algorithm is like optimizing a race car. To make the car as fast as possible on different tracks, you need to find the right tires, suspension settings, and rear wing position. However, testing all possible combinations of these settings is impractical and expensive. Additionally, these settings interact with each other, so they can’t be considered in isolation.

Our approach automates this process: using machine learning, we leverage past experience from already tested settings to suggest and test new, promising configurations. This way, we gradually move towards the ideal settings while conserving resources like computing power. With the methods we’ve developed, it is possible to tailor a generic algorithm to a specific class of problems. This allows us to optimize both the quality and the time needed to find a solution. Through this configuration process, users can unlock the full potential of an algorithm and ensure optimal performance in specific applications. This enables users to improve both the robustness and performance of their algorithms when faced with new problems.

Our literature review offers a comprehensive overview of the different configurations settings and the available configurators. During the course of the project, we have looked at different problem variants of algorithm configuration and have developed methods for these.

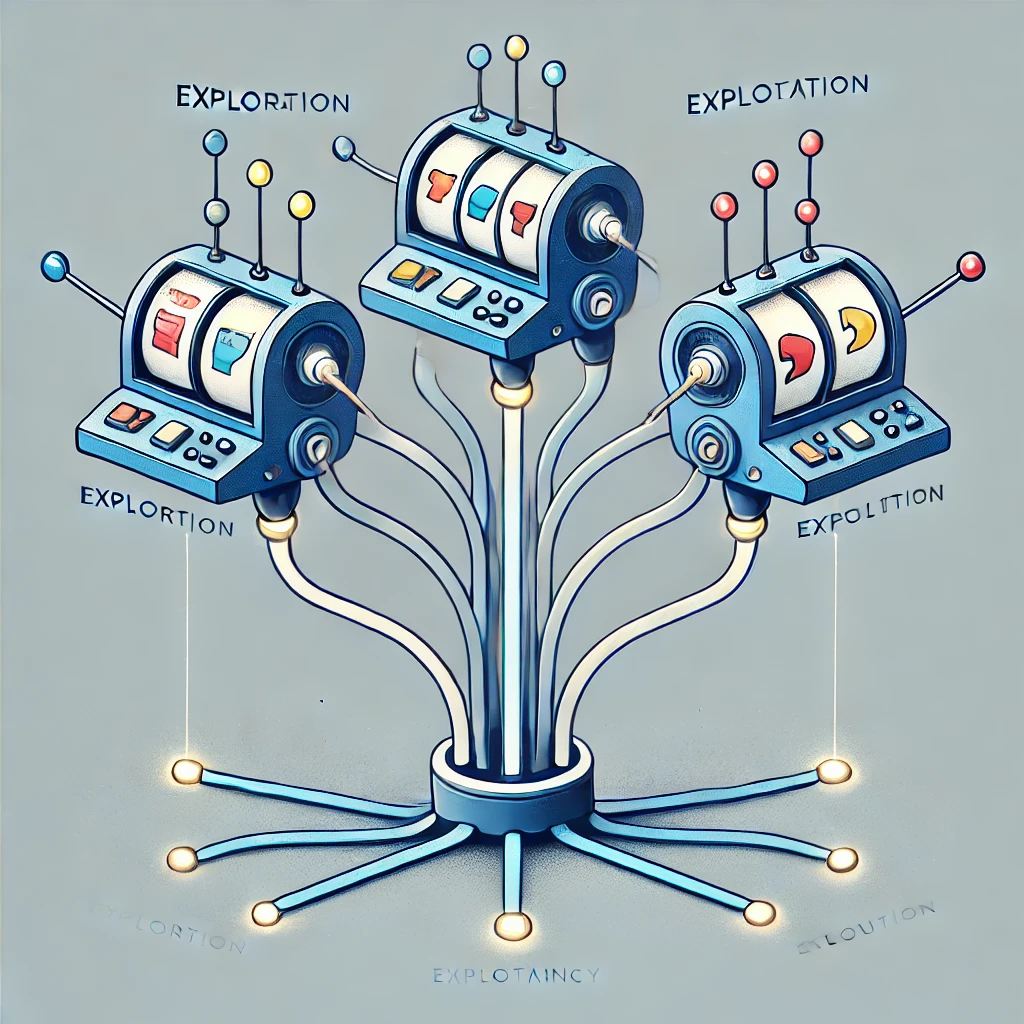

Multi-armed bandits are a popular setting from probability theory in which the goal is to balance between trying new options and reusing those that have proven to work well. A big advantage of applying Multi-armed bandit approaches to algorithm configuration is that we can theoretically guarantee that poor settings are tested only rarely. However, there exist so many different algorithm configuration settings regarding the cost function and the parameter configuration space, that we first had to develop a general Multi-armed bandit framework. This can handle different amounts of parameter configurations that can be executed and compared in parallel, different kinds of cost functions to measure the performance of a parameter configuration as well as different statistics to aggregate the observed costs for a specific configuration [1]. This proposed framework provably finds an optimal configuration if the available budget for executions is large enough. Moreover, our derived necessary budget matches our proven lower bound for this kind of algorithms up to a logarithmic factor.

Multi-armed bandits are a popular setting from probability theory in which the goal is to balance between trying new options and reusing those that have proven to work well. A big advantage of applying Multi-armed bandit approaches to algorithm configuration is that we can theoretically guarantee that poor settings are tested only rarely. However, there exist so many different algorithm configuration settings regarding the cost function and the parameter configuration space, that we first had to develop a general Multi-armed bandit framework. This can handle different amounts of parameter configurations that can be executed and compared in parallel, different kinds of cost functions to measure the performance of a parameter configuration as well as different statistics to aggregate the observed costs for a specific configuration (Brandt et al., 2022).

This proposed framework provably finds an optimal configuration if the available budget for executions is large enough. Moreover, our derived necessary budget matches our proven lower bound for this kind of algorithms up to a logarithmic factor.

In addition, we were able to extend and apply the above-mentioned Multi-armed bandit method to the Algorithm Configuration setting and to transfer most of our theoretical results (Brandt et al., 2023). We have not only proven that our proposed algorithm configurator finds a near-optimal configuration with a high probability if the budget is large enough but also shown empirically that our method narrows down the existing performance gap to heuristic algorithm configurators. The user does not only gain trust in our method that is guaranteed to return a good solution with high probability, but we also have an automatically induced resource efficiency by a derived sufficient budget of our algorithm that almost matches the proven lower bound on the necessary budget that is needed to identify a good configuration in this setting.

Another step to a more resource-efficient algorithm configuration is to adapt existing Multi-armed bandit-based approaches to make their workflow even more resource-friendly while remaining trustworthy with theoretical guarantees (Brandt et al., 2024). In particular, we modified an existing Multi-armed bandit approach and its ex- tension to Hyperparameter Configuration which can be seen as a special case of algorithm configuration to be able to reuse observations from previous runs with a smaller budget. With that, they only need a smaller amount of newly considered configurations while still the same theoretical guarantees hold as running the algorithms from scratch with only new configurations sampled. This allows a more resource-efficient Hyperparameter Optimization while keeping the trust- worthiness with the theoretical guarantees about the quality of the returned configuration.

Anytime algorithms are designed to deliver valid results at any point during execution, refining their solutions as they run. Configuring these algorithms effectively means tailoring them to perform well for different possible runtimes. Our approach focuses on building a configuration portfolio that assigns specific configurations to different time times, ensuring the best possible results throughout execution. Decision makers who often grapple with uncertainty regarding the available runtime can then pick a configuration from the portfolio. The results show that tailoring configurations for specific runtimes is a highly effective strategy, with clear benefits for performance.

Inspired by recent advancements in reinforcement learning, we started developing an instance-specific configurator. This type of configuration enables the user to get specific configurations tailored to the features of an instance. The concept is fairly straightforward: during training, a reinforcement learning agent receives features of problem instances and proposes configurations to try. These configurations are then evaluated on the instances, and the agent gets feedback in the form of achieved runtime or quality. Through this iterative process, the agent learns which configurations work well for specific types of instances. When presented with new instances during testing, the agent uses its learned knowledge to suggest configurations based on instance features. We can show that this approach to instance specific configuration leads speed-ups compared to existing methods.

Additional resources

- Implementation of different bandit methods for realtime Algorithm Configuration: https://github.com/DOTBielefeld/colstim

- Implementation of the algorithm configurator AC-Band:

https://github.com/DOTBielefeld/ACBand

Cooperation

Associated with Prof. Dr. Eyke Hüllermeier, LMU München

Project Publications

- Brandt, Jasmin, Viktor Bengs, Björn Haddenhorst, and Eyke Hüllermeier (2022). ‘‘Finding Optimal Arms in Non-stochastic Combinatorial Bandits with Semi-bandit Feedback and Finite Budget’’. In: Advances in Neural Information Processing Systems (NeurIPS). url: https://openreview.net/forum?id=h37KyWDDC6B.

- Brandt, Jasmin, Björn Haddenhorst, Viktor Bengs, and Eyke Hüllermeier (2024). ‘‘Dueling Bandits with Delayed Feedback’’. In: Proceedings of the DataNinja sAIOnARA Conference, pp. 29–31. doi: 10.11576/dataninja-1163.

- Brandt, Jasmin, Elias Schede, Viktor Bengs, Björn Haddenhorst, and Eyke Hüllermeier (2022). ‘‘Finding Optimal Arms in Non-stochastic Combinatorial Bandits with Applications in Algorithm Configuration’’. In:Proceedings of the Sixth Conference on From Multiple Criteria Decision Aid to Preference Learning (DA2PL).

- Brandt, Jasmin, Elias Schede, Björn Haddenhorst, Viktor Bengs, Eyke Hüllermeier, and Kevin Tierney (2023). ‘‘AC-Band: A Combinatorial Bandit-Based Approach to Algorithm Configuration’’. In: Thirty-Seventh AAAI Conference on Artificial Intelligence, 2023. AAAI Press, pp. 12355–12363. doi: 10.1609/AAAI.V37I10.26456. url: https://doi.org/10.1609/aaai.v37i10.26456.

- Brandt, Jasmin, Elias Schede, Shivam Sharma, Viktor Bengs, Eyke Hüllermeier, and Kevin Tierney (2023). ‘‘Contextual Preselection Methods in Pool-based Realtime Algorithm Configuration’’. In: Proceedings of the LWDA 2023 Workshops, Marburg (Germany), Oktober 9-11th, 2023. CEUR Workshop Proceedings. CEUR-WS.org, pp. 492–505.

- Brandt, Jasmin, Elias Schede, Kevin Tierney, and Eyke Hüllermeier (2021). ‘‘EKAmBa: Realtime Configuration of Algorithms with Multi-armed Bandits’’. In: Workshop on “Trustworthy AI in the Wild” at 44th German Conference on Artificial Intelligence (KI).

- Brandt, Jasmin, Marcel Wever, Viktor Bengs, and Eyke Hüllermeier (2024). ‘‘Best Arm Identification with Retroactively Increased Sampling Budget for More Resource-Efficient HPO’’. In: Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, (IJCAI). International Joint Conferences on Artificial Intelligence Organization, pp. 3742–3750.

- Haddenhorst, Björn, Viktor Bengs, Jasmin Brandt, and Eyke Hüllermeier (2021). ‘‘Testification of Condorcet Winners in Dueling Bandits’’. In:Proceedings of the Thirty-Seventh Conference on Uncertainty in Artificial Intelligence (UAI). Vol. 161. Proceedings of Machine Learning Research, pp. 1195–1205.

- Schede, Elias, Jasmin Brandt, Alexander Tornede, Marcel Wever, Viktor Bengs, Eyke Hüllermeier, and Kevin Tierney (2022). ‘‘A Survey of Methods for Automated Algorithm Configuration’’. In: J. Artif. Intell. Res. 75, pp. 425–487. doi: 10.1613/jair.1.13676. url: https://doi.org/10.1613/jair.1.13676.