Goal

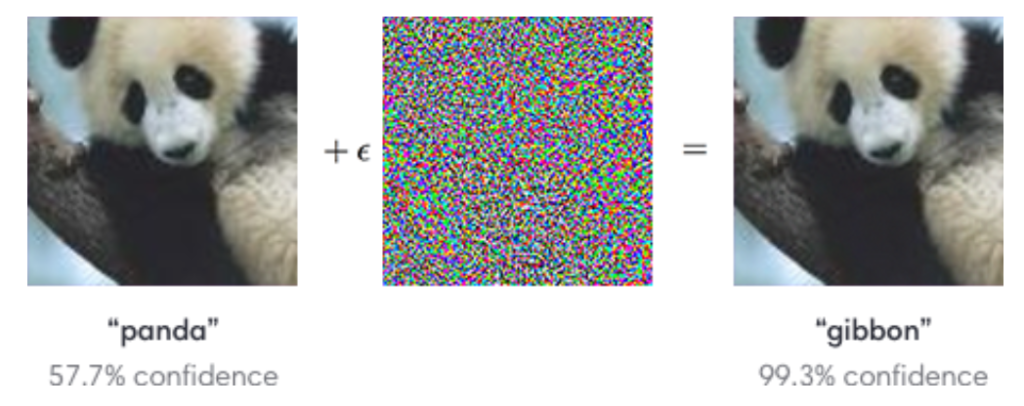

An anomaly is “a person or thing that is different from what is usual“*. Detecting such anomalies in large amounts of data has become a crucial task in many fields, such as cyber-security, fraud prevention, and health monitoring. Motivated by the success in other applications, there has recently been a rise in interest in developing deep learning approaches for anomaly detection. Despite the overall paramount performance of these (deep) neural networks, they are error-prone in general. A common phenomenon when working with neural networks is their lack of so-called adversarial robustness. This means that already small changes in the input can lead to a completely different outcome. Consider, for instance, the example below: There a neural network misclassified the image of a panda bear as a gibbon, after adding a tiny amount of noise to the image.

This lack of adversarial robustness is particularly problematic when the neural networks are deployed in safety-critical applications, where a malfunction has severe consequences and can even cause harm to human life.

The goal of this project is to develop efficient methods to verify (i.e., to mathematically prove) the absence of such an error and thus that neural networks work safely and reliably. As those factors play a major role in building trust in AI systems, the project will ultimately advance the development of trustworthy AI.

Project Overview

This project is part of an interdisciplinary, DFG-funded research unit† on anomaly detection in chemical process engineering. While (deep) neural networks will ultimately achieve superhuman performance, there is a great responsibility to ensure their safety and reliability: Failure to report anomalies may result in imminent hazards to the environment and harm to human life. Conversely, false alarms may lead to substantial financial or scientific loss due to unnecessary downtime of a plant.

In order to verify, and thus ensure, that a neural network functions safely and reliably, we need to first define what “safely and reliably“ means in our context. Therefore, we start by eliciting a set of specifications, i.e., properties a neural network needs to satisfy to operate safely and reliably. These specifications include properties one wants a neural network to fulfill in general (e.g., adversarial robustness) and properties tailored to our specific domain of chemical process engineering. The next step is to formalize these specifications in a suitable specification language to be processed and verified automatically by dedicated verification tools. This task will entail the development of a novel temporal logic that will allow us to also express (and verify) so-called neuro-symbolic properties such as “an alarm has to be triggered when the gas in a given pipe starts to condensate”. Afterward, we plan to develop efficient algorithms to verify neural networks against these correctness properties. We will consider both qualitative verification methods (proving or disproving that a network satisfies a property) and quantitative verification methods (computing the probability of a network satisfying a property). Moreover, we intend to also develop techniques that use counterexamples (obtained from failed verification attempts) to “repair“ neural networks such that they fulfill the correctness properties.

Preliminary Results

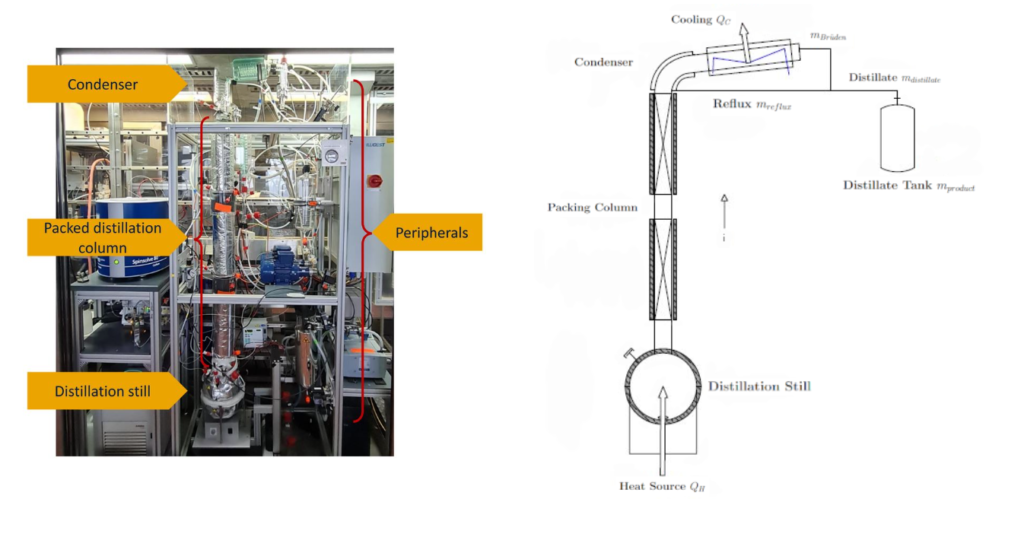

Batch distillation is one of the most important processes in the chemical industry. It uses a so-called distillation column to separate a liquid mixture into multiple, possible unknown chemical components. To this end, the mixture is filled into the distillation still, a vessel connected to a heat source, and heated up until it boils. The uprising vapor will then condense at the top of the column and the distillate can be withdrawn.

Towards eliciting a set of specifications for batch distillation, we worked together with colleagues from the Department of Mechanical and Process Engineering of RPTU Kaiserslautern-Landau. They provided us with expert knowledge and a set of chemical and physical properties that should not be violated during a proper execution of a batch distillation process. From these properties, we derived a set of specifications for our anomaly detectors, e.g., “If the pressure is below or equal to zero, the network should indicate an anomaly“.

The next step was to formalize these specifications in a suitable specification language to be processed and verified automatically by dedicated verification tools. We developed a temporal specification logic by combining Linear Temporal Logic2 and the First-Order theory of Linear Real Arithmetic. The former allows us to model temporal dependencies, while the later allows us to express relational dependencies and real-valued sensor data. From these correctness specifications, we plan to create a benchmark suite that will be essential to evaluate any verification technique present or developed in the future.

Project Publications

- Katzke, Tim, Simon Lutz, Emmanuel Müller, and Daniel Neider (2024). ‘‘Provable Guarantees for Deep Learning-Based Anomaly Detection through Log- ical Constraints’’. en. In: DataNinja sAIOnARA Conference, DataNinja sAIOnARA 2024 Conference. doi: 10.11576/DATANINJA-1174.

- Lutz, Simon, Justus Arweiler, Aparna Muraleedharan, Niklas Kahlhoff, Fabian Hartung, Indra Jungjohann, Mayank Nagda, Daniel Reinhardt, Dennis Wag- ner, Jennifer Werner, Justus Will, Jakob Burger, Michael Bortz, Hans Hasse, Sophie Fellenz, Fabian Jirasek, Marius Kloft, Heike Leitte, Stephan Mandt, Steffen Reithermann, Jochen Schmid, and Daniel Neider (2024). ‘‘A Benchmark Suite for Verifying Neural Anomaly Detectors in Distillation Processes’’. Accepted.

- Lutz, Simon and Daniel Neider (2023). ‘‘Benchmark: neural networks for anomaly detection in batch distillation’’. In: AISoLA, pp. 449–452.

- Lutz, Simon and Daniel Neider (2024). ‘‘Interpretable Machine Learning via Lin- ear Temporal Logic’’. en. In: DataNinja sAIOnARA Conference, DataNinja sAIOnARA 2024 Conference. doi: 10.11576/DATANINJA-1176.

- Lutz, Simon, Florian Wittbold, Simon Dierl, Benedikt Böing, Falk Howar, Barbara König, Emmanuel Müller, and Daniel Neider (2023). ‘‘Interpretable Anomaly Detection via Discrete Optimization’’. In: arXiv preprint arXiv:2303.14111. https://arxiv.org/pdf/2303.14111

Associated Partners

References

- I. J. Goodfellow, J. Shlens, and C. Szegedy. Explaining and harnessing adversarial examples. In Y. Bengio and Y. LeCun, editors, 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, 2015.

- A. Pnueli. The temporal logic of programs. In 18th Annual Symposium on Foundations of Computer Science (sfcs 1977), pages 46–57, 1977.

- *https://dictionary.cambridge.org/dictionary/english/anomaly

- †https://gepris.dfg.de/gepris/projekt/459419731